Ethical Considerations in AI Development

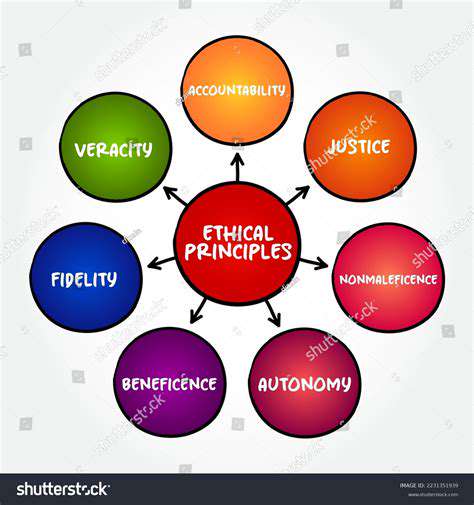

Artificial intelligence is rapidly transforming fields from healthcare to finance, and that pace makes ethical considerations central to AI development. AI systems must be designed and deployed in ways that align with human values and promote fairness, transparency, and accountability. Doing so requires examining data and algorithms for potential bias and taking proactive measures to mitigate its impact.

A crucial aspect of this is understanding the potential societal implications of AI. The development and implementation of AI systems can have profound effects on employment, privacy, and even human dignity. Therefore, a thorough ethical framework is essential to guide the responsible development and use of AI technologies.

Bias Mitigation in AI Algorithms

AI systems learn from data, and if that data reflects existing societal biases, the AI system will likely perpetuate those biases. For example, if a dataset used to train an image recognition system predominantly features images of people of one race, the system may perform poorly on images of people of other races. This is a critical issue that needs to be addressed to ensure fairness and equity in the application of AI.
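One way to make this kind of disparity visible is to report accuracy separately for each group instead of as a single overall number. The sketch below is illustrative only: the function name `accuracy_by_group`, the group tags, and the toy labels are all hypothetical and do not come from any particular system.

```python
from collections import defaultdict

def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: accuracy} for parallel lists of labels, predictions, and group tags."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        if truth == pred:
            correct[group] += 1
    return {g: correct[g] / total[g] for g in total}

# Hypothetical toy data: group "B" is underrepresented in the evaluation set.
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 0, 0, 1, 1]
groups = ["A", "A", "A", "A", "A", "B", "B", "B"]

per_group = accuracy_by_group(y_true, y_pred, groups)
# Gap between the best-served and worst-served group.
gap = max(per_group.values()) - min(per_group.values())
print(per_group, gap)
```

A large gap between groups is a signal to investigate further. On its own it does not show where the disparity comes from.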

Developing robust methods for detecting and mitigating bias in datasets and algorithms is paramount. Data augmentation, algorithmic adjustments, and the use of more diverse datasets are all necessary steps in this process. Continuous monitoring and evaluation of deployed AI systems is also vital, so that bias is not inadvertently introduced or amplified over time.
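As one possible example of an algorithmic adjustment, training examples can be reweighted so that each group contributes the same total weight regardless of how many examples it has. This is a minimal sketch of inverse-frequency reweighting, assuming each example carries a group tag. The function name and the toy data are hypothetical, and reweighting is only one of several techniques that could be used here.

```python
from collections import Counter

def inverse_frequency_weights(groups):
    """Return one weight per example so every group has equal total weight."""
    counts = Counter(groups)
    n_groups = len(counts)
    n_total = len(groups)
    # weight = n_total / (n_groups * group_size); weights sum to n_total overall.
    return [n_total / (n_groups * counts[g]) for g in groups]

# Hypothetical imbalanced dataset: six examples from group "A", two from group "B".
groups = ["A"] * 6 + ["B"] * 2
weights = inverse_frequency_weights(groups)

total_a = sum(w for w, g in zip(weights, groups) if g == "A")
total_b = sum(w for w, g in zip(weights, groups) if g == "B")
print(total_a, total_b)
```

Weights like these could be passed to a training routine that accepts per-example weights. Whether that actually reduces disparity still has to be checked on held-out data.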

Transparency and Explainability in AI Systems

Many AI systems, particularly those based on deep learning, operate as black boxes, making it difficult to understand how they arrive at their decisions. This lack of transparency can undermine trust and create concerns about accountability. Understanding the decision-making processes of AI systems is crucial for ensuring fairness and addressing potential errors.

Explainable AI (XAI) techniques aim to provide insight into the reasoning behind AI decisions. That insight helps developers and users understand a system's behavior and may reduce unintended consequences. Promoting transparency in AI development is a critical step towards fostering public trust and responsible use.
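To give a concrete sense of what an explanation technique can look like, the sketch below implements permutation importance. It shuffles one input feature at a time and measures how much the model's accuracy drops. The toy model, data, and function names are hypothetical and chosen only for illustration. This is one simple, model-agnostic approach among many.

```python
import random

def accuracy(model, rows, labels):
    """Fraction of rows for which the model's prediction matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(model, rows, labels, n_features, n_repeats=20, seed=0):
    """Average accuracy drop when each feature column is shuffled independently."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild each row with feature j replaced by its shuffled value.
            shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances

# Hypothetical black-box model that, unknown to the caller, only uses feature 0.
def toy_model(row):
    return 1 if row[0] > 0.5 else 0

rows = [(0.1, 0.9), (0.8, 0.2), (0.3, 0.7), (0.9, 0.4), (0.2, 0.1), (0.7, 0.8)]
labels = [toy_model(r) for r in rows]

importances = permutation_importance(toy_model, rows, labels, n_features=2)
print(importances)
```

Here the second feature's importance is exactly zero because the toy model never reads it, while shuffling the first feature degrades accuracy. With real models, scores like these are estimates and can be misleading when features are strongly correlated.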

Accountability and Responsibility in AI Deployment

As AI systems become more integrated into critical aspects of our lives, defining clear lines of accountability for their actions becomes increasingly important. Who is responsible when an AI system makes a mistake or causes harm? Addressing this question requires a multi-faceted approach, encompassing legal frameworks, industry standards, and ethical guidelines.

Establishing clear guidelines and procedures for the oversight and management of AI systems is essential. This includes establishing mechanisms for reporting errors, addressing complaints, and implementing corrective actions. Ultimately, fostering a culture of accountability in AI development is crucial for building trust and preventing potential misuse.

Ethical Implications of AI in Healthcare

AI has the potential to revolutionize healthcare, improving diagnostics, treatment planning, and patient care. However, ethical considerations are paramount. Ensuring patient privacy and data security is crucial in the context of AI-driven healthcare solutions. AI systems should be designed and implemented in a way that protects sensitive patient information and adheres to strict data privacy regulations.

Bias in AI algorithms used for medical diagnosis or treatment could lead to significant disparities in healthcare access and outcomes. Therefore, meticulous attention to bias mitigation is critical in this domain. This includes ensuring diverse and representative datasets in AI training and ongoing evaluation to identify and address potential biases.

The Future of Ethical AI Development

The rapid advancement of AI necessitates ongoing dialogue and collaboration among ethicists, researchers, policymakers, and the public. Continuous refinement of ethical frameworks and guidelines is crucial to keep pace with the evolving landscape of AI technology. We need to actively engage in discussions about the societal impact of AI and develop strategies for mitigating potential risks.

Establishing international collaborations and standards for ethical AI development is vital to ensure consistency and prevent a fragmented approach. This shared responsibility will help guide AI's evolution in a manner that benefits humanity as a whole.
